All kinds of hardware and software troubleshooting. We provide network solutions. Registered software is available here.

Saturday, April 30, 2011

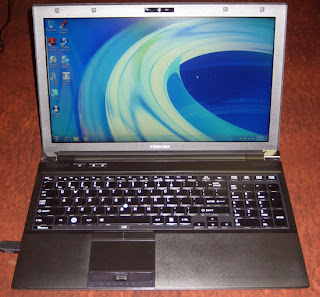

Toshiba's New Mobile Enterprise Line: The Portege R830 Sets the Standard

To say the Toshiba Portege R700 was well-received by the industry would apparently be an understatement; Toshiba brought a level of engineering acumen to bear on that machine heretofore unseen on their notebooks, and the success of the R700 and its descendants is now informing Toshiba's entire mobile business line.

Toshiba's older mobile business notebooks under their Tecra line weren't bad, but stylistically were well behind the times. Made chiefly of ABS plastic, these notebooks unfortunately didn't look like much of an improvement over, say, an HP G-series notebook, and they inherited some of the same problems that plagued Toshiba's consumer notebooks. The older Tecras are big and bulky, but since the success of the R700, Toshiba has taken the hint and completely rearchitected their 14" and 15.6" Tecra notebooks while updating the Portege. The result is the R800 series, split between the 14" Tecra R840, 15.6" Tecra R850, and 13.3" Portege R830.

Since the 13.3" Portege is basically the flagbearer of Toshiba's new line, we'll start there first. The R830 is nearly identical to its predecessor, the R700, and as such has received the fewest changes. Updated to include Intel's new Sandy Bridge processors, the R830 remains thin and light and should actually run a touch cooler than its predecessor given the slightly better thermals of Intel's new generation of chips. Toshiba has also updated the R830 to include USB 3.0, and just like the R700 it can be configured all the way up to the top-of-the-line dual-core mobile i7. A consumer-level version, the R835, is also available, starting at $888.99. Moving to that notebook means giving up the ExpressCard port and docking capabilities, and moving down to a one-year warranty, but we suspect that will seem a reasonable trade-off for most consumers. MSRP for the Portege R830 ranges from $1,049 to $1,649.

The Tecra R840 and R850 on the other hand have seen massive changes. Gone are the big and bulky older chassis, replaced by a much sleeker, thinner build strongly reminiscent of the R700 and R830. Toshiba uses fiberglass-reinforced casing with a honeycomb rib structure in the R840 and R850 that substantially improves durability. In the case of the R840, Toshiba also uses the unique Airflow Cooling Technology found in the Portege. Developed by Intel and then modified by Toshiba, the notebook features what amounts to a wind tunnel with an intake and exhaust within the body, allowing for air to pass quickly and efficiently through the system while cooling the hotter components. Given the larger form factor of the R850, Toshiba opted to use more traditional cooling in that system. Both the R840 and R850 offer the new Sandy Bridge processors along with AMD Radeon HD 6470M graphics. The graphics hardware seems mild, but it allows users to plug in up to two additional screens and use these notebooks as proper mobile workstations. The R840 starts with an MSRP of $899 while the R850 starts with an MSRP of just $879.

I had the opportunity to see these new notebooks when I met with Toshiba representatives in person and they're a major step forward from older designs. These are sleek, clean, and smart designs. My chief concern is that Toshiba is releasing these notebooks into a market where they have to compete with the new designs by HP which are, frankly, stunning in person. My other complaint is a milder one, but nonetheless relevant: while the chiclet keyboard Toshiba has moved to with these new notebooks is a step in the right direction, the smooth, slightly-glossy finish on the keys isn't the best or most comfortable to use. It's true that traditional plastics may be more liable to wear out over time, but they're more comfortable in the interim. Still, competing workstation-class notebooks from Dell or HP are generally more expensive, and Toshiba is offering a great value in these notebooks. If anything, we just wish some of these innovations would trickle down into the consumer space.

Last but not least, I was able to check out Toshiba's new Mobile Monitor. This is a 14", 1366x768 screen powered entirely over USB using DisplayLink technology. I'm not a tremendous fan of DisplayLink, but I can definitely see using this screen in a pinch. Other mobile, USB-powered screens have tended to simply be too small, but at 14 inches the Mobile Monitor enters the realm of usability. It is built into a classy storage case that folds out to serve as a stand, with smart cable routing around the back. It's the kind of thing that must be experienced in person to grasp just how useful a device like this might be; if I travelled more, it's fair to say I'd invest in one for myself. At $199 the MSRP would seem unreasonable for a garden variety 14" monitor, but given the portability and design the price seems sound.


Antec HCG 750W: Built for Gamers?

Most of the Antec products we've reviewed have been higher-end designs with unique features or abilities: for instance, there was the "sandwich" PCB in the HCP-1200 and the environmentally friendly design of the EarthWatts Green 380W. There's nothing out of the ordinary in the HCG data sheets other than the powerful +12V rails. The HCG series seems to represent most PSUs: it's ordinary and "boring". So what makes this PSU an Antec product?

For starters, plenty of manufacturers have attractive power supplies, but the robust case and red highlights are at least unusual. We've seen designs like this in higher cost/wattage PSUs like the 850W Enermax Revolution85+ and Huntkey's X7 1200W. Now Antec brings this aesthetic to lower wattages and prices.

Looks aside, Antec also cares about quality. They have chosen very expensive capacitors from Rubycon. In addition, as our Antec contact Christoph (Business Unit Manager at Antec) likes to say, "more is better", meaning that two main caps are better than one. The ball bearing fans also last longer than cheap sleeve bearing models, which is another minor upgrade. While these simple elements aren't unusual for PSUs in this price range, they do raise our expectations, and we're hoping for a good showing from the HCG-750.

On the following pages we will see if the caps can reduce ripple and noise and if the fan runs quietly. Good results would also help compensate for the non-modular cables, which most customers will see as a disadvantage. Let's begin with a closer look at its characteristics and package contents.


White iPhone 4 Debuts, Slightly Thicker

Proving once again that anything Apple does is immediately put under a microscope, headlines are making their way across the Internet regarding the thickness of the just-debuted white iPhone 4.

At long last, Apple rolled out a white iPhone 4 on Thursday. Some estimates figure Apple may sell 1.5 million white iPhone 4s. It adds a new twist to the iconic device, and since nobody really knows when the iPhone 5 will make its way to market, some consumers may opt for the shiny new technology toy now.

They may buy it, that is, if they aren't too disappointed that the white iPhone 4 is a smidgeon thicker than its black predecessor. Indeed, the white model is a whopping 0.2mm thicker than the black one. It's hardly noticeable to the naked eye, but close-up photos reveal it's a tad fatter.

Micro Analyzing Apple

It took Apple nearly a year to roll out the slightly thicker white iPhone 4. Apple encountered problem after problem producing the white iPhone. What caused the delay?

Apple senior vice president Phil Schiller told All Things Digital that it's not easy to make a white iPhone. "There's a lot more that goes into both the material science of it -- how it holds up over time...but also in how it all works with the sensors."

The bottom line: it took Apple nine months to build a white iPhone 4 model with sufficient UV protection for its internal components. It seems white iPhones need added protection from the sun, just like fair-skinned humans.

"Apple is so visible that they are micro analyzed," said Rob Enderle, principal analyst at The Enderle Group. "I don't think consumers are going to care about this. That little extra amount of thickness is hardly going to spoil their experience with the device, but it's just the nature of being Apple. They live on such a high peak of excellence that anything that falls away from that is highlighted and exemplified."

The Privacy Scandal

For all the hoopla over the white iPhone 4, the microscope remains focused on geolocation tracking issues. Earlier this week, news reports revealed that iPhones and iPads collect geolocation data and store it in an unencrypted file on the devices.

"These aren't smartphones; they are spy phones," said John Simpson, director of the nonpartisan, nonprofit public interest group's Privacy Project. "Consumers must have the right to control whether their data is gathered and how it is used."

The Federal Trade Commission's report, "Protecting Consumer Privacy in an Era of Rapid Change," proposed a Do Not Track mechanism last December. Since then Rep. Jackie Speier, D-CA, has introduced HR 654, the Do Not Track Me Online Act.

In California, Sen. Alan Lowenthal, D-Long Beach, has introduced SB 761, a bill that would establish a Do Not Track mechanism for companies doing business in California. A "Do Not Track" mechanism is a method that allows a consumer to send a clear, unambiguous message that one's online activities should not be tracked. Recipients of the message would be required to honor it.

"The mobile world is the wild west of the Internet where these tech giants seem to think anything goes," said Simpson. "Consumers need the same sort of strong privacy protections whether they go online via a wired device or a mobile device."


Friday, April 29, 2011

NVIDIA Releases GeForce GT 520

Coming hot on the heels of last week’s Radeon HD 6450 launch, today NVIDIA quietly launched the GT 520, their low-end video card for the 500 series.

It’s based on GF119, a GF11x GPU with no immediate analogue in the GF10x series, which has already been shipping in mobile products as the GeForce GT 410M and 520M. In NVIDIA’s existing desktop lineup, it should replace the GeForce GT 220.

| | GTS 450 | GT 430 | GT 520 | GT 220 (DDR3) |
|---|---|---|---|---|
| Stream Processors | 192 | 96 | 48 | 48 |
| Texture Address / Filtering | 32/32 | 16/16 | 8/8 | 16/16 |
| ROPs | 16 | 4 | 4 | 8 |
| Core Clock | 783MHz | 700MHz | 810MHz | 625MHz |
| Shader Clock | 1566MHz | 1400MHz | 1620MHz | 1360MHz |
| Memory Clock | 902MHz (3.608GHz data rate) GDDR5 | 900MHz (1.8GHz data rate) DDR3 | 900MHz (1.8GHz data rate) DDR3 | 900MHz (1.8GHz data rate) DDR3 |
| Memory Bus Width | 128-bit | 128-bit | 64-bit | 128-bit |
| VRAM | 1GB | 1GB | 1GB | 1GB |
| FP64 | 1/12 FP32 | 1/12 FP32 | 1/12 FP32 | N/A |
| Transistor Count | 1.17B | 585M | N/A | 505M |
| Manufacturing Process | TSMC 40nm | TSMC 40nm | TSMC 40nm | TSMC 40nm |
| Price Point | $99 | ~$70 | ~$60 | ~$60 |

GF119

GF119 is largely half of a GF108 GPU, and in the process appears to be the smallest configuration possible for Fermi. In terms of functional units, a single SM is attached to a single GPC, which in turn is attached to a single block of 4 ROPs and a single 64-bit memory controller. Not counting the differences in clock speeds, compared to GF108 a GF119 GPU should be half as fast in shading and geometry performance, while in any situations where the two are ROP-bound the performance drop-off should be limited to the impact of lost memory bandwidth. Speaking of which, as with GF108, GF119 is normally paired with DDR3, so with a memory bus half as wide, memory bandwidth should be halved as well.

For the GT 520, the nominal clocks are 810MHz for the core and 900MHz (1.8GHz data rate) for the DDR3 memory. As with other low-end products, we wouldn’t be surprised to eventually see core clock speeds vary some. All of the cards launching today are shipping with 1GB of DDR3. And while we don’t have a card in-house to test, based on the performance of the GT 430 we’d expect performance to match if not slightly lag the Radeon HD 6450. Power consumption should also be similar; NVIDIA gives the GT 520 a TDP of 29W, while we’d expect idle power draw to be around 10W.
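The bandwidth halving described here is straightforward arithmetic: peak theoretical bandwidth is the bus width in bytes multiplied by the effective data rate. The short Python sketch below applies that general formula to the memory figures from the spec table. It assumes 1 GB = 10^9 bytes, reports theoretical peaks rather than measured throughput, and its function and variable names are purely illustrative.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gtps: float) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 10**9 bytes).

    bus_width_bits: width of the memory interface in bits.
    data_rate_gtps: effective data rate in gigatransfers per second
                    (900MHz DDR3 transfers twice per clock -> 1.8 GT/s).
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_gtps


# (bus width in bits, effective data rate in GT/s), taken from the spec table.
CARDS = {
    "GTS 450": (128, 3.608),
    "GT 430": (128, 1.8),
    "GT 520": (64, 1.8),
    "GT 220 (DDR3)": (128, 1.8),
}

for name, (width, rate) in CARDS.items():
    print(f"{name}: {memory_bandwidth_gbs(width, rate):.1f} GB/s")
# GTS 450: 57.7 GB/s
# GT 430: 28.8 GB/s
# GT 520: 14.4 GB/s
# GT 220 (DDR3): 28.8 GB/s
```

With the same DDR3 data rate, the GT 520's 64-bit bus gives exactly half the GT 430's peak figure, so its higher core clock can't make up the difference in bandwidth-limited situations.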


The GT 520 is shipping immediately both in retail and to OEMs; as with other low-end products the focus is on OEM sales with retail as a side-channel. NVIDIA is not providing an MSRP for the card, but we’re seeing prices start at $60. It goes without saying that performance is most certainly going to lag similarly priced cards, particularly the GT 430, which can be found for almost as cheap after rebate.

April 2011 Video Card MSRPs

| NVIDIA | Price | AMD |
|---|---|---|
| GeForce GTX 590 | $700 | Radeon HD 6990 |
| GeForce GTX 580 | $480 | |
| GeForce GTX 570 | $320 | Radeon HD 6970 |
| | $260 | Radeon HD 6950 2GB |
| GeForce GTX 560 Ti | $240 | Radeon HD 6950 1GB |
| | $200 | Radeon HD 6870 |
| GeForce GTX 460 1GB | $160 | Radeon HD 6850 |
| GeForce GTX 460 768MB | $150 | Radeon HD 6790 |
| GeForce GTX 550 Ti | $130 | |
| | $110 | Radeon HD 5770 |
| GeForce GT 430 | $50-$70 | Radeon HD 5570 |
| GeForce GT 520 | $55-$60 | Radeon HD 6450 |
| GeForce G 210 | $30-$50 | Radeon HD 5450 |


Acer’s Iconia Tab A500 Joins the Honeycomb Party

The year of the tablet continues, and every major manufacturer (along with many smaller parties) is keen to get a cut of the pie. As their entrant into the tablet market, Acer is announcing their Iconia Tab A500. We posted a short overview of the Iconia-6120 Dual-Screen notebook a few weeks ago, and it’s weird to have such wildly different devices in the same product family, but the Iconia Tab is a far more traditional device.

Google selected NVIDIA’s Tegra 2 platform as the target hardware for Android 3.0 (Honeycomb), so it’s little surprise that Acer will use Tegra 2 (specifically the Tegra 250 variant) as the core of the A500. Perhaps more importantly, the A500 uses a 10.1” display with a 1280x800 resolution, so it will be similar in size and form factor to the Motorola Xoom. It’s actually a bit heavier (1.69 lbs. vs. 1.61 lbs.) and fractionally thicker (0.52” vs. 0.51”) than the Xoom, but since we’re dealing with tablets rather than smartphones it’s unlikely anyone will notice. What they will notice is differences in styling; the A500 has a brushed aluminum casing that looks quite nice in the photos we’ve seen.


Other aspects of the device are pretty standard. Tegra 2 starts with a dual-core Cortex-A9 CPU and pairs that with NVIDIA’s ULP GeForce graphics and 1GB of RAM. There are front- (2MP) and rear-facing (5MP) cameras, an HDMI port for viewing content on an external display (1080p supported), 802.11bgn WiFi, 16GB flash memory on the initial device (with 32GB versions planned for the future), and a micro-SD expansion slot capable of accepting up to 32GB micro-SD cards. The tablet comes with two 3260mAh Li-polymer batteries rated for up to eight hours of casual gaming or HD video playback and 10 hours of WiFi Internet browsing. Another piece of hardware is the six-axis motion-sensing gyro, which can be useful for games (and detecting orientation of the tablet). Finally, there’s a built-in GPS, and Bluetooth support allows the A500 to connect to a variety of peripherals.

One of the key elements of any tablet is the display, and here’s where things are a bit fuzzy right now: Acer’s press release states that the LCD “provides an 80-degree wide viewing angle to ensure an optimal viewing experience”. Hopefully that means it’s an IPS (or similar technology) panel, so that you’re getting true 80 degrees off-center viewing in both vertical and horizontal directions. More likely (being the cynic that I am), it’s a TN panel with “160-degree” horizontal and vertical viewing angles—except we all know that the way viewing angles are rated is often far from ideal, as one only has to look at a typical TN laptop panel to know that it can’t be used from above or below. When we can get an actual unit for testing, we’ll provide full details on the display.

On the software side of things, Acer has all the usual Android 3.0 accoutrements, but they’re including a few extras. Given the Tegra 2 platform, it’s nice to see a couple of games thrown into the mix for free: Need for Speed: Shift and Let’s Golf come pre-installed—I’m a lot more interested in the former than the latter. Adobe’s Flash is also supported, but it doesn’t come pre-installed, which is easy enough to rectify. Given that Google has expressed an interest in standardizing the Android experience and avoiding fragmentation, there’s not a lot of unusual software added on the A500. Acer includes their LumiRead and Google Books apps for enjoying eBooks, Zinio for full-color digital magazines, and a trial version of Docs to Go for office documents. Naturally, users all get full access to the Android Marketplace for installing additional applications. The A500 also includes clear.fi for digital media sharing, so it can communicate over your wireless network with any other DLNA-compliant devices to share multimedia content.

While the above items aren’t necessarily major improvements over competing tablets, one aspect of the A500 is sure to turn a few heads: the device is slated to go on sale at Best Buy starting at just $450. That puts it nearly $150 cheaper than the base model Motorola Xoom, albeit with 16GB instead of 32GB of integrated storage. The Iconia Tab A500 will be available for pre-order at Best Buy starting April 14 and available in stores and online starting April 24.

Besides the core unit, Acer also has a variety of peripherals planned. First on the list is a full-sized dedicated Bluetooth keyboard ($70 MSRP). There’s also a dock/charging station with IR remote and connections for external speakers/headphones ($80 MSRP), which can hold the tablet in two different tilt positions. Last is a protective case that allows access to the connectors and ports ($40 MSRP); it also lets you prop the tablet in two positions for hands-free viewing of movies or other content.


Amazon Apologizes for EC2 Cloud Outage


Now that the dust has settled on the Amazon EC2 cloud outage, the company is offering an apology and a credit for its role in making many popular web sites, like Foursquare, Twitter, and Netflix, unavailable.

Amazon Elastic Block Store customers in regions affected at the time of the disruption, regardless of whether their resources and applications were impacted or not, are getting an automatic 10-day credit equal to 100 percent of their usage.

"We know how critical our services are to our customers' businesses and we will do everything we can to learn from this event and use it to drive improvement across our services," Amazon said in a statement. "As with any significant operational issue, we will spend many hours over the coming days and weeks improving our understanding of the details of the various parts of this event and determining how to make changes to improve our services and processes."

Yes, Outages Happen

Most anyone familiar with data center operations could sympathize with Amazon -- outages, even severe outages, happen, and companies are usually judged according to how quickly they respond to the problem and whether they are able to prevent similar events from occurring again, said Charles King, principal analyst at Pund IT.

However, he added, while Amazon's cloud services are generally well regarded, patience among affected companies and their clients wore thin as the days stretched on. That was hardly surprising, he said, but it created the opportunity to consider exactly how organizations have been leveraging the EC2 cloud, and how well they've been doing it.

"Some, including Netflix and SmugMug, were hardly affected at all, largely because they had designed their environments for high availability -- in line with Amazon's guidelines -- using EC2 as merely one of several IT resources," King said. "On the other hand, those that had depended largely or entirely on Amazon for their online presence came away badly burned."

Cloud Lessons Learned

King sees two key, albeit not exactly new, lessons in the Amazon cloud outage. First, systems that rely on single points of failure will fail at some point. Second, companies whose services depend largely or entirely on third parties can do little but complain, apologize, pray and twiddle their thumbs when things go south.

As King sees it, the fact that disaster is inevitable is why good communications skills are so crucial for any company to develop, and why Amazon's anemic public response to the outage made a bad situation far worse than it needed to be. While the company has been among the industry's most vocal cloud services cheerleaders, King said, it seemed essentially tone deaf to the damage its inaction was doing to public perception of cloud computing.

"At the end of the day, we expect Amazon will use the lessons learned from the EC2 outage to significantly improve its service offerings," King said. "But if it fails to closely evaluate communications efforts around the event, the company's and its customers' suffering will be wasted."


Thursday, April 28, 2011

ASUS K53E: Testing Dual-Core Sandy Bridge

We recently reviewed the ASUS U41JF, a worthy follow-up to the U-series’ legacy. Today we have another ASUS laptop, this time one of the first dual-core Sandy Bridge systems to grace our test bench. The K53E comes to us via Intel, and they feel it represents what we’ll see on the various other dual-core SNB laptops coming out in the near future. Unlike the Compal quad-core SNB notebook we tested back in January, this notebook is available at retail, and it comes with very impressive performance considering the price, but there’s a catch.

Intel has taken the stock K53E and fitted it with a faster i5-2520M processor, which should be a moderate performance bump from the K53E with i5-2410M and a healthy upgrade from the non-Turbo i3-2310M model. The i5-2520M runs at a stock clock speed of 2.5GHz with Turbo modes running at up to 3.2GHz; in contrast, the i5-2410M checks in at 2.3GHz with a 2.9GHz max Turbo, and the poor i3-2310M runs at a constant 2.1GHz. There are a few other changes as well, depending on which model you want to take as the baseline. The K53E-B1 comes with 6GB standard and a 640GB HDD, while the K53E-A1 comes with 4GB and a 500GB HDD; our test system has a 640GB HDD and 6GB RAM (despite the bottom sticker labeling it as a K53E-A1). Intel also installed Windows 7 Ultimate 64-bit instead of the usual Home Premium 64-bit, which means there’s no bloatware on the system—and ASUS’ standard suite of utilities is also missing.
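One rough way to size up these CPU options is to compare their clock speeds directly. The Python sketch below does that for the three processors quoted here. Treat the result as an upper bound on CPU-bound gains only: it assumes performance scales linearly with frequency and that the Turbo clock is sustained, which real workloads won't always allow. The names in it are illustrative.

```python
# Clock speeds (GHz) quoted for the K53E's CPU options.
# The i3-2310M lacks Turbo Boost, so its peak clock equals its base clock.
CPUS = {
    "i5-2520M": {"base": 2.5, "turbo": 3.2},
    "i5-2410M": {"base": 2.3, "turbo": 2.9},
    "i3-2310M": {"base": 2.1, "turbo": None},
}


def peak_clock(name: str) -> float:
    """Highest clock the chip can reach, in GHz."""
    spec = CPUS[name]
    return spec["turbo"] if spec["turbo"] is not None else spec["base"]


def pct_gain(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1.0) * 100.0


for baseline in ("i5-2410M", "i3-2310M"):
    base_gain = pct_gain(CPUS["i5-2520M"]["base"], CPUS[baseline]["base"])
    peak_gain = pct_gain(peak_clock("i5-2520M"), peak_clock(baseline))
    print(f"i5-2520M vs {baseline}: +{base_gain:.1f}% base, +{peak_gain:.1f}% peak")
# i5-2520M vs i5-2410M: +8.7% base, +10.3% peak
# i5-2520M vs i3-2310M: +19.0% base, +52.4% peak
```

The roughly 9-10% clock advantage over the i5-2410M fits the "moderate performance bump" described above, while the gap to the non-Turbo i3-2310M is far larger whenever Turbo can kick in.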

If you want a ballpark estimate of cost for a similar laptop, the Lenovo L520 has the same i5-2520M CPU, 4GB RAM, and a 320GB HDD with Windows 7 Professional 64-bit, priced at $826. Intel’s pricing on the i5-2520M is $225, so around $800 total for the K53E would be reasonable, but like most OEMs ASUS gets better pricing for the i5-2400 series parts and thus chooses to save money there. For most users, the stock K53E-B1 will be more than sufficient, as the extra 10-15% performance increase from the CPU upgrade won’t normally show up in day-to-day use—you’d be far better off adding an SSD rather than upgrading the CPU. Here are the specs of the laptop we’re reviewing.

ASUS K53E (Intel Customized) Specifications

Processor Intel Core i5-2520M

(2x2.50GHz + HTT, 3.2GHz Turbo, 32nm, 3MB L3, 35W)

Chipset Intel HM65

Memory 1x4GB + 1x2GB DDR3-1333 CL9 (Max 8GB)

Graphics Intel HD 3000 Graphics (Sandy Bridge)

12 EUs, 650-1300MHz Core

Display 15.6" WLED Glossy 16:9 768p (1366x768)

(AU Optronics B156XW02 v6)

Hard Drive(s) 640GB 5400RPM HDD

(Seagate Momentus ST9640423AS)

Optical Drive DVDRW (Matshita UJ8A0ASW)

Networking Gigabit Ethernet (Atheros AR8151)

802.11bgn (Intel Advanced-N 6230, 300Mbps capable)

Bluetooth 2.1+EDR (Intel 6230)

Audio 2.0 Altec Lansing Speakers

Microphone and headphone jacks

Capable of 5.1 digital output (HDMI/SPDIF)

Battery 6-Cell, 10.8V, 5.2Ah, 56Wh

Front Side Memory Card Reader

Left Side 1 x USB 2.0

HDMI

VGA (D-SUB)

Gigabit Ethernet

AC Power Connection

Exhaust vent

Right Side Headphone/S-PDIF Jack

Microphone Jack

2 x USB 2.0

Optical Drive

Kensington Lock

Back Side N/A

Operating System Windows 7 Ultimate 64-bit

Dimensions 14.88" x 9.96" x 1.11-1.37" (WxDxH)

Weight 5.84 lbs (with 6-cell battery)

Extras 0.3MP Webcam

102-Key keyboard with Numeric Keypad

Flash reader (MMC, SD, MS/Pro)

Warranty 2-year standard warranty on some models

1-year standard warranty on others

Pricing K53E-B1 (i5-2410M): Starting at $719

K53E-A1 (i3-2310M): Starting at $625

Like the U41JF, outside of the CPU we’ve already covered most of the items here. One new addition is the 640GB 5400RPM Seagate Momentus drive (previously we usually received 500GB models). With a higher areal density, sequential transfer rates will go up, but the random access speed is still going to be horrible. Also like the U41JF, there are quite a few missing features: USB 3.0, eSATA, FireWire, and ExpressCard are not here, so if you want any of those you’ll need to go elsewhere. The DVDRW, LCD, audio, and other items are all typical; the 0.3MP webcam sacrifices resolution in order to work better in low-light conditions.

There are a lot of similarities to the ASUS X72D/K72DR we looked at in October, though we’re running an Intel CPU and using a 15.6”-screen chassis this time, and there’s no discrete graphics option. Of course, the HD 5470 is no performance beast, so Intel’s HD 3000 actually posts similar results (albeit with perhaps less compatibility across a larger selection of games). Also interesting is that ASUS is using a 56Wh battery in place of the 48Wh units that have been so common; hopefully that will be the case on all of their midrange laptops going forward, though we’re still partial to the 84Wh batteries in the U-series.

The real purpose of this laptop is to get dual-core Sandy Bridge out there for the lowest possible cost. While the notebook as configured would probably need to sell for around $800, the K53E-B1 with i5-2410M is going to perform very similarly and will set you back $720. Because Intel performed a clean OS install, we also skipped the regular set of ASUS utilities. Power4Gear is about the only one we usually find useful, with the ability to power off the optical drive usually boosting battery life a bit relative to other laptops. Since we’re not looking at a stock K53E, though, we decided to just run the system as configured on both the hardware and software fronts.


Puget Systems Obsidian: Solid as a Rock

Today's review unit marks our third from Puget Systems. Thus far they've all been remarkable builds and this one proves to be no exception. Designed expressly for users (including businesses) who need the most reliable machine they can get, Puget has shipped us their Obsidian tower. On paper this machine is reasonable if unexceptional, but the choices behind its design are anything but ordinary.

If you read our review of the Puget Systems Deluge Mini gaming machine, some of this configuration is going to seem a bit like déjà vu. We mentioned in that review that Puget qualifies and chooses components through fairly rigorous testing and data collection, and we've been able to actually look at some of their data thanks to their CEO, Jon Bach. The seemingly unremarkable Obsidian line is most emblematic of that philosophy. Geared specifically towards enterprise and government use, the Obsidian is designed and backed expressly for maximum reliability.

Puget Systems Obsidian Specifications

Chassis Antec Mini P180

Processor Intel Core i5-2500K (4x3.3GHz, 32nm, 6MB L3, 95W)

Motherboard ASUS P8H67-M EVO (Rev. 3.0) Motherboard with H67 chipset

Memory 2x4GB Kingston DDR3-1333 @ 1333MHz (expandable to 16GB)

Graphics Intel HD Graphics 3000

Hard Drive(s) Western Digital Caviar Black 1TB SATA 6Gbps HDD

Optical Drive(s) ASUS DVD+/-RW Drive

Networking Realtek PCIe Gigabit Ethernet

Audio Realtek ALC892 HD Audio

Speaker, mic, line-in, and surround jacks for 7.1 sound

Optical out

Front Side 2x USB 2.0

eSATA

Headphone and mic jacks

Optical drive

Top -

Back Side PS/2

6x USB 2.0

2x eSATA

6-pin FireWire

DisplayPort

HDMI

VGA

DVI-I

Optical out

2x USB 3.0

Ethernet

Speaker, mic, line-in, and surround jacks for 7.1 sound

Operating System Windows 7 Professional 64-bit SP1

Dimensions 8.3" x 17.2" x 17.1" (WxDxH)

Weight 20.9 lbs (case only)

Extras Antec TP-650 650W Power Supply

Scythe Katana 3 Air Cooler

Warranty 1-year limited parts warranty and lifetime labor and phone support

2-year and 3-year warranties available

Pricing Obsidian starts at $1,149

Review system configured at $1,307

On paper, the Obsidian is going to seem pretty unexciting. The included Intel Core i5-2500K has four physical cores specced to run at 3.3GHz, up to 3.7GHz in turbo, along with 6MB of L3 cache, but Puget opted for it in this build largely because of Intel's freakishly bizarre market segmentation on their Sandy Bridge desktop processors. Intel opted to castrate the Sandy Bridge integrated graphics on every desktop chip except the ones where no one would care about them: the unlocked K-series processors. Given the decision to rely on integrated graphics for this build (especially because the ASUS P8H67-M EVO motherboard has every type of modern display connection available), it's easy to understand why the i5-2500K was chosen.

The rest of the parts are going to appear just as unexceptional, but when you check out the configurator on their site, you'll notice there are even fewer options for parts in the Obsidian than there are for any of their other machines, and this is by design. The Obsidian is very specifically meant for enterprise-class work with an extremely low noise level and power draw. As a result the industry standard 1TB Western Digital Caviar Black is a given, and as with the Deluge Mini only Intel SSDs are offered.

Again, pay attention to the details. Thanks to the ASUS P8H67-M EVO the Obsidian has every type of modern connection you could conceivably ask for--though it's fair to suggest that in enterprise situations serial or parallel connectivity may yet be required, as some larger businesses have a tendency to keep old hardware on hand without moving forward with the times. The Antec Mini P180 coupled with the Scythe Katana 3 air-cooler ensures the system runs cool and quiet.


Verizon Restores LTE, But Puts Second 4G Phone on Hold

The outage on Verizon Wireless' 4G Long Term Evolution network has ended, but new LTE phones cannot be activated. Verizon postponed the launch of its second LTE smartphone, Samsung's Droid Charge. Despite the outage, the load on Verizon's LTE network is still low, with few users, an analyst said, but data usage is climbing fast.

Verizon Wireless said Thursday that the outage on its fledgling high-speed 4G Long Term Evolution network has ended. But the wireless carrier postponed the launch of its newest LTE smartphone.

"Our 4G LTE network is up and running," Verizon spokesperson Jeff Nelson told us in an e-mail. "Our network engineers and vendors quickly identified the issue and solved it."

Reconnect Your Modem

"Customers using the Thunderbolt have normal service," Nelson said, referring to HTC's LTE smartphone, the only 4G handset currently available from Verizon. "Laptop users with USB modems may need to reconnect to the network when moving between 3G and 4G. This will continue to improve."

The company declined to comment on the cause of the snafu, which started Wednesday, days after the network's LTE footprint was expanded to six new markets, for a total of 46 metropolitan areas. LTE devices can roam to 3G coverage when leaving an LTE area. Rival AT&T warned in December that this roaming could cause "jarring speed degradation" for LTE customers unless 3G networks are upgraded, as AT&T says it is doing before moving to LTE.

Verizon told Thunderbolt customers via Twitter on Wednesday that their data speeds would temporarily be slower because of the outage, falling back to 1xRTT, the standard that preceded 3G. Voice calls were not affected.

The company also said that activations of new LTE phones will not be possible, which may explain why the launch of the Samsung Droid Charge was delayed, with no new date given. Verizon has announced 10 devices, including smartphones, USB modems, and Wi-Fi hot spots, for the LTE network. Verizon says LTE users can expect average data rates of five to 12 megabits per second for downloads and two to five Mbps for uploads in "real-world, fully loaded network environments."
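Verizon's figures are in megabits, not megabytes, per second, which is easy to misread. As a rough illustration only, the sketch below converts the quoted range and estimates an idealized download time; the 100MB file is a hypothetical example, decimal units are assumed (1MB = 1,000,000 bytes), and protocol overhead and radio conditions are ignored.

```python
def megabytes_per_second(mbps: float) -> float:
    """Convert a link rate in megabits/s to megabytes/s (8 bits per byte)."""
    return mbps / 8


def download_seconds(file_mb: float, mbps: float) -> float:
    """Idealized transfer time for a file_mb-megabyte file at mbps megabits/s.

    Ignores protocol overhead, latency and changing radio conditions.
    """
    return file_mb * 8 / mbps


# Verizon's quoted real-world LTE download range (5 to 12 Mbps)
for rate in (5, 12):
    print(f"{rate} Mbps = {megabytes_per_second(rate):.3f} MB/s, "
          f"hypothetical 100MB file in about {download_seconds(100, rate):.0f} s")
```

At the low end of the range that is 0.625MB/s, or about 160 seconds for the hypothetical file; at the high end it is 1.5MB/s, or roughly 67 seconds.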

Verizon hopes to serve 175 markets with LTE coverage by the end of the year.

Explosion of Data

This week's snafu isn't a near-term issue for Verizon LTE customers, said Weston Henderek, lead wireless analyst at Current Analysis.

"Overall, the load on the network is still relatively low, given that they just started launching LTE phones, and there are not many users on the network," Henderek said. "However, in the long run Verizon Wireless will face the same issue with its LTE network that it and its major competitors have faced on the 3G side. That is, that data usage is exploding at such a high pace that the carriers are having a hard time keeping up with capacity demands. Verizon is claiming that they are on top of this issue, but that remains to be seen, based on how quickly data usage grows."

Making matters worse, Henderek noted, is that all major wireless carriers face a looming spectrum crunch that could affect their networks further if more is not made available soon by the Federal Communications Commission.


Wednesday, April 27, 2011

Sparrow: An OS X IMAP Client for Gmail Users

The best hardware can be made that much better by that one essential program you can't live without - with that in mind, I'd like to write a bit about some software for you today.

If you’re a Mac power user, there’s a good chance that you’ve heard of Sparrow, a new IMAP client for OS X that has garnered quite a bit of praise since it was first offered in beta form a few months ago. It brings many of Gmail’s best features to an email client, and since version 1.1, it can bring those same features to non-Gmail IMAP accounts as well.

If you’ve ever connected an IMAP email client like Apple Mail or Mozilla Thunderbird to Gmail, you know it can do a few weird things: Deleting an email from the client will usually remove it from the Inbox, but won’t actually delete it from the server – the email lives on in the All Mail folder instead of actually going away. IMAP clients also create and use their own Drafts, Trash, and Sent folders by default, instead of Gmail’s built-in folders. Because of this, drafts saved in the IMAP client can’t be edited in the web client (and vice versa), extraneous labels are created in the web client, and in general things just don’t mesh as smoothly as they should unless you know which settings to change in which client.
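The folder mismatch comes down to how a client decides which server folders are its Drafts, Sent and Trash. One way to avoid creating duplicates is to read the special-use attributes (standardized in RFC 6154) that a server can attach to mailboxes in its IMAP LIST response, as Gmail's server does today. The sketch below is an illustrative assumption about how a client might do this, not a description of how Sparrow actually works; the sample lines are hypothetical Gmail-style responses, and the function name is made up for this example.

```python
import re

# One line of an IMAP LIST response, e.g.  (\HasNoChildren \Trash) "/" "[Gmail]/Trash"
# The hierarchy delimiter may be a quoted string or NIL.
_LIST_LINE = re.compile(r'\((?P<flags>[^)]*)\) (?:"[^"]*"|NIL) (?P<name>.+)')

# RFC 6154 special-use attributes (matched case-insensitively) -> friendly key
_SPECIAL_USE = {
    "\\all": "All", "\\archive": "Archive", "\\drafts": "Drafts",
    "\\flagged": "Flagged", "\\junk": "Junk", "\\sent": "Sent", "\\trash": "Trash",
}


def special_use_folders(list_lines):
    """Map special-use roles to mailbox names from the lines of a LIST response.

    Accepts bytes (as returned by imaplib's IMAP4.list()) or str. Mailbox names
    are returned as-is; non-ASCII names would still need modified UTF-7 decoding.
    """
    folders = {}
    for raw in list_lines:
        line = raw.decode("ascii", errors="replace") if isinstance(raw, bytes) else raw
        match = _LIST_LINE.match(line)
        if match is None:
            continue
        name = match.group("name").strip()
        if len(name) >= 2 and name[0] == name[-1] == '"':
            name = name[1:-1]  # drop the surrounding quotes
        for flag in match.group("flags").split():
            key = _SPECIAL_USE.get(flag.lower())
            if key is not None:
                folders[key] = name
    return folders


# Hypothetical Gmail-style LIST output, used instead of a live connection
sample = [
    b'(\\HasNoChildren) "/" "INBOX"',
    b'(\\All \\HasNoChildren) "/" "[Gmail]/All Mail"',
    b'(\\Drafts \\HasNoChildren) "/" "[Gmail]/Drafts"',
    b'(\\HasNoChildren \\Sent) "/" "[Gmail]/Sent Mail"',
    b'(\\HasNoChildren \\Trash) "/" "[Gmail]/Trash"',
]
print(special_use_folders(sample))
```

In a real client the lines would come from `conn.list()` on an `imaplib.IMAP4_SSL` connection. A client that saves drafts to the folder mapped to "Drafts" and moves deleted mail to the one mapped to "Trash" would use Gmail's own folders instead of creating new labels.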

Enter Sparrow, which was built from the ground up with Gmail users in mind – it shows threaded email conversations, it works around all of Gmail’s aforementioned idiosyncrasies, and it does so in an appealing minimalist package without any additional finessing or configuring. The 1.1 update even adds support for the Priority Inbox feature if you have it enabled.

Visually and functionally, Sparrow draws primarily from two things: the Twitter for Mac app, and the iOS mail client.

Sparrow displays all of your accounts vertically on the left-hand side of the app – to switch accounts, just click a different profile picture. Buttons are available for your main folders (Inbox, Sent, Drafts, Trash) and for basic functions (New message, Reply, Archive, Delete). Double clicking an email thread will open it up in a second window, where you can read all the messages, reply, and add or change labels.

If you want things to get even more compact, you can enable the “hide message preview” setting and fit even more messages into a given window.

If you hit the button in the bottom-right corner of the app, a message pane will slide out and you can view your emails without opening a second window. In this view, Sparrow is not much different from the iPad mail client in landscape mode.

You can star your messages as you normally would in Gmail, and your labels are denoted by small color-coded triangles. These triangles are pretty subtle and they don’t sync to the colors you have your labels set to in the web client, but if you’re a heavy label user you’ll probably get over these gripes.

Sparrow works best if you use Gmail without too many of the Google Labs add-ons enabled. People who rely heavily on, say, Multiple Inboxes or Undo Send (a personal favorite for so, so many reasons) may miss them in Sparrow.


AMD's Radeon HD 6450: UVD3 Meets The HTPC

AMD’s Northern Islands family is composed of four GPUs, roughly divided into two categories. At the top is the 6900 series powered by Cayman, AMD’s first VLIW4 GPU. Below Cayman are three more GPUs, all derived from the VLIW5 Evergreen generation (5000 series).

The first of these GPUs was Barts, which is the basis of the 6800 series that launched back in October of 2010. However, until now we haven’t seen the other two mystery GPUs in the retail market. Today that starts to change.

The final two Northern Islands GPUs are Caicos and Turks. They have been available in the OEM market for both desktop and mobile products since the beginning of the year, but as is common with low-end/high-volume GPUs, a retail presence comes last instead of first. AMD is finally giving Caicos its first retail presence today; it will be powering the new Radeon HD 6450. Packing all the upgrades we saw with Barts last year, it will effectively be replacing the Radeon HD 5450. But how well does AMD’s latest stand up in the crowded low-end market? Let’s find out.

| | AMD Radeon HD 5670 | AMD Radeon HD 5570 | AMD Radeon HD 6450 (GDDR5) | AMD Radeon HD 5450 |
|---|---|---|---|---|
| Stream Processors | 400 | 400 | 160 | 80 |
| Texture Units | 20 | 20 | 8 | 8 |
| ROPs | 8 | 8 | 4 | 4 |
| Core Clock | 775MHz | 650MHz | 750MHz | 650MHz |
| Memory Clock | 1000MHz (4000MHz data rate) GDDR5 | 900MHz (1800MHz data rate) DDR3 | 900MHz (3.6GHz data rate) GDDR5 | 800MHz (1600MHz data rate) DDR3 |
| Memory Bus Width | 128-bit | 128-bit | 64-bit | 64-bit |
| VRAM | 1GB / 512MB | 1GB | 512MB | 1GB / 512MB |
| Transistor Count | 627M | 627M | 370M | 292M |
| TDP | 61W | 42.7W | 27W | 19.1W |
| Manufacturing Process | TSMC 40nm | TSMC 40nm | TSMC 40nm | TSMC 40nm |
| Price Point | $65-$85 | $50-$70 | $55 | $25-$50 |

Although it’s likely redundant to say that the GPU market is on a constant forward march in performance, it’s a very apt point when discussing the Radeon HD 6450. With the launch of a new generation of integrated GPUs from both Intel and AMD in the last few months, the tail end of the line took a big step forward, and now everything else must move forward to keep pace. AMD’s previous low-end product, the 80SP Radeon HD 5450, is effectively matched by Intel’s HD 3000; meanwhile you can get as many SPs in an AMD Zacate APU, although its performance isn’t quite enough to catch the 5450. Regardless, when iGPUs can deliver the performance of the lowest-end dGPU, a new low-end dGPU is required. This is Caicos.

At the lower end of the GPU market we’re accustomed to seeing a very large gap between the lowest GPU and the next model higher; with the 5000 series it was the difference between the 80SP Cedar and the 400SP Redwood GPUs. The performance drop-off is quite severe, but it’s what’s necessary to make a GPU small enough and cheap enough to meet the needs of the extreme budget segment of the market. Thus while the latest generation of iGPUs requires AMD to produce a faster low-end GPU, they still need to keep it cheap enough for the market, and as such there won’t be a radical overhaul.

At the end of the day, Caicos (and the Radeon HD 6450 based on it) is a larger version of the 5450's GPU with Barts’ technology improvements. Coming from the 5450, AMD has doubled the SIMD count from one SIMD to two, doubling the number of SPs from 80 to 160. Meanwhile the number of texture units per SIMD has decreased from eight to four, resulting in the same eight texture units, but now split between the two SIMDs. This is now consistent with the rest of AMD's lineup, as Cedar/5450 had twice as many texture units in its one SIMD as other 5000/6000 parts normally have per SIMD.
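The SP and texture unit counts follow from simple per-SIMD arithmetic. The sketch below assumes each VLIW5 SIMD engine is 16 VLIW units wide with five stream processors per unit (80 SPs per SIMD); the function name is illustrative. The same arithmetic reproduces the 400SP/20-texture-unit Redwood parts in the specification table when given five SIMDs with four texture units each.

```python
# Assumption: each VLIW5 SIMD engine in Evergreen/Northern Islands parts is
# 16 VLIW units wide, and each VLIW5 unit contains 5 stream processors.
SPS_PER_VLIW5_SIMD = 16 * 5  # = 80


def gpu_layout(simds: int, texture_units_per_simd: int) -> dict:
    """Total stream processors and texture units for a given SIMD configuration."""
    return {
        "stream_processors": simds * SPS_PER_VLIW5_SIMD,
        "texture_units": simds * texture_units_per_simd,
    }


cedar_5450 = gpu_layout(simds=1, texture_units_per_simd=8)   # the odd one out
caicos_6450 = gpu_layout(simds=2, texture_units_per_simd=4)  # "normal" 4 per SIMD
redwood = gpu_layout(simds=5, texture_units_per_simd=4)      # 5570/5670

print(cedar_5450)   # {'stream_processors': 80, 'texture_units': 8}
print(caicos_6450)  # {'stream_processors': 160, 'texture_units': 8}
print(redwood)      # {'stream_processors': 400, 'texture_units': 20}
```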

The ROP side of the equation has not been changed however, pairing the 160SP compute core with the same set of four ROPs we saw on the 5450. What has changed on the ROP/memory side is support for GDDR5; while we will see DDR3 6450 cards too, AMD is more or less using GDDR5 from top to bottom now. For the GDDR5 6450 the core clock is 750MHz and the memory clock is 900MHz (3.6GHz data rate), so not only does the 6450 have more SIMDs than the 5450, but it’s clocked faster by 100MHz and has over twice the memory bandwidth too.
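The "over twice the memory bandwidth" figure can be checked from the specification table: peak bandwidth is the effective data rate multiplied by the bus width in bytes. The sketch below is a minimal calculation under that standard formula, using decimal gigabytes; it gives theoretical peaks, not measured throughput.

```python
def bandwidth_gb_s(data_rate_mt_s: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s (decimal) from effective data rate and bus width."""
    return data_rate_mt_s * 1e6 * (bus_width_bits / 8) / 1e9


cards = {
    # name: (effective data rate in MT/s, bus width in bits), from the table above
    "Radeon HD 5670 (GDDR5)": (4000, 128),
    "Radeon HD 5570 (DDR3)": (1800, 128),
    "Radeon HD 6450 (GDDR5)": (3600, 64),
    "Radeon HD 5450 (DDR3)": (1600, 64),
}

for name, (rate, width) in cards.items():
    print(f"{name}: {bandwidth_gb_s(rate, width):.1f} GB/s")

ratio = bandwidth_gb_s(3600, 64) / bandwidth_gb_s(1600, 64)
print(f"6450 vs 5450: {ratio:.2f}x")
```

This works out to 28.8GB/s for the GDDR5 6450 against 12.8GB/s for the 5450, a ratio of 2.25. Notably, the 6450's 64-bit GDDR5 bus matches the 28.8GB/s of the 128-bit DDR3 Radeon HD 5570.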

These changes give it a major leg-up on the 5450 while still keeping the GPU size manageable. The transistor count and die size have gone up as one would expect; the 5450 was 292M transistors on a 59mm² die, while the 6450 is 370M transistors on a 67mm² die. So the 6450 will likely cost more for AMD to produce, but only marginally so. Power has also gone up, from 6.4W at idle and 19W at load (TDP) to 9W at idle and 27W at load, mostly due to the higher power consumption of GDDR5. 27W is still easily handled by passive coolers, and we should see a number of both actively and passively cooled cards.
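Expressed as percentages, the 5450-to-6450 changes quoted above show why the extra cost should be modest. This is a quick arithmetic sketch using only the figures in the paragraph; treating die area as the main driver of manufacturing cost is a simplifying assumption.

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage increase from old to new."""
    return (new / old - 1) * 100


# (Radeon HD 5450 value, Radeon HD 6450 value) as quoted above
changes = {
    "transistors (M)": (292, 370),
    "die size (mm^2)": (59, 67),
    "idle power (W)": (6.4, 9),
    "load power (W)": (19, 27),
}

for label, (old, new) in changes.items():
    print(f"{label}: +{pct_increase(old, new):.1f}%")
```

The transistor count rises by roughly 27%, but the die grows by only about 14%. That is consistent with the "only marginally" more expensive verdict. Power, driven largely by GDDR5, grows by around 40% at both idle and load.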

AMD has put the MSRP of the 6450 at $55. This will cover both the 512MB GDDR5 and 1GB DDR3 varieties. Pricing of low-end cards rarely toes the line, so expect prices to be all over the place. At $55 the market is quite packed, so AMD’s competition is going to include the 5450, the 5550/5570, the GT 220, and even a few budget-priced GT 430 cards. A few of these cards are going to be quite a bit faster than the 6450—ultimately the economic advantage of a small GPU is more present in high-volume OEM sales than it is in retail sales.

In any case, the one thorn in the side of the 6450 is that it’s a soft launch. While all of our data is applicable to the existing similar OEM cards, retail cards won’t be showing up until the 19th. Honestly we’re a bit confused as to why AMD is soft launching the 6450 given that it doesn’t have any immediate competition—the more insidious reasons usually attached to a soft launch are that it’s to keep potential customers from buying a competitor’s product, but it’s not as if NVIDIA has recently launched a similar product. Anyhow, your guess is as good as ours, but it’s unfortunate to see AMD doing a soft launch after doing so well in the mid-range and high-end markets this year.

April 2011 Video Card MSRPs

| NVIDIA | Price | AMD |
|---|---|---|
| GeForce GTX 590 | $700 | Radeon HD 6990 |
| GeForce GTX 580 | $480 | |
| GeForce GTX 570 | $320 | Radeon HD 6970 |
| | $260 | Radeon HD 6950 2GB |
| GeForce GTX 560 Ti | $240 | Radeon HD 6950 1GB |
| | $200 | Radeon HD 6870 |
| GeForce GTX 460 1GB | $160 | Radeon HD 6850 |
| GeForce GTX 460 768MB | $150 | Radeon HD 6790 |
| GeForce GTX 550 Ti | $130 | |
| | $110 | Radeon HD 5770 |
| GeForce GT 430 | $50-$70 | Radeon HD 5570 |
| GeForce GT 220 | $55 | Radeon HD 6450 |
| GeForce G 210 | $30-$50 | Radeon HD 5450 |


Apple's White iPhone 4 Is Coming -- Only a Year Late

Better late than never, Apple might say as it prepares to roll out a white iPhone 4 nearly a year after it was expected. The white iPhone 4 will be available at AT&T and Verizon Wireless, and may be a way to keep customers interested until Apple's iPhone 5 arrives in the fall. The white iPhone 4 and the iPad 2 will also be available overseas.

At long last, Apple is rolling out a white iPhone 4. The white model of the popular device will make its way to market on Thursday via Apple's online store, retail stores, carrier stores, and other authorized retailers.

"The white iPhone 4 has finally arrived and it's beautiful," said Philip Schiller, Apple's senior vice president of worldwide product marketing. "We appreciate everyone who has waited patiently while we've worked to get every detail right."

A Year Later ...

Waiting patiently may be an understatement. It took Apple nearly a year to roll out the white iPhone 4. Apple encountered problem after problem producing the white iPhone. Although AT&T was supposed to get an exclusive on the white iPhone, Verizon Wireless customers can also buy the device. The white iPhone 4 may be a way to hold off consumers waiting for the iPhone 5, which news reports suggest is delayed until the fall.

"Another unicorn dies as the white iPhone 4 finally makes an appearance," said Michael Gartenberg, an analyst at Gartner. "What I find fascinating about this is that it's a white iPhone and its introduction is garnering this much attention. Once again it shows that pretty much anything Apple does is going to be put under a microscope, analyzed and reported on."

White models of the iPhone 4 will be available in more than 20 countries this week. Meanwhile, Apple is expanding the audience for its iPad 2. The second generation of the tablet will begin selling in Japan on Thursday and in Hong Kong, Korea, Singapore and eight additional countries on Friday.

Music Service Rumored

Everything Apple does -- or is even rumored to do -- is indeed headline material for technology magazines and even mainstream news outlets. And there are plenty of Apple rumors this week alone. One of those rumors is T-Mobile's quest for its own iPhone.

It remains to be seen if T-Mobile will get its iPhone 4 before Apple rolls out an iPhone 5, but some observers are suggesting it may soon begin offering a white model. And a Photoshopped image of the iPhone 5 leaked earlier this week still has people talking. The image is based on a sketch former Engadget chief Joshua Topolsky saw and posted on his blog.

The image circulating the web offers a teardrop design that's thicker at the top than the bottom. The screen is a tad larger at 3.7 inches. Some observers are betting Apple is going to take a page from its MacBook laser-manufacturing machinations and carve the device out of aluminum.

Beyond mobile devices, Apple is rumored to be readying a fee-based music service for the cloud. The rumor mill says Apple will initially roll out the service for free, but eventually charge $20 a year to let consumers do what Amazon.com launched at the end of March. Amazon launched Amazon Cloud Drive, Amazon Cloud Player for Web, and Amazon Cloud Player for Android. But iPhone users were left without a solution.


Tuesday, April 26, 2011

The OCZ Vertex 3 Review (120GB)

SandForce was first to announce and preview its 2011 SSD controller technology. We first talked about the controller late last year, got a sneak peek at its performance at CES this year, and then just a couple of months ago brought you a performance preview based on pre-production hardware and firmware from OCZ.

Although the Vertex 3 was originally scheduled to ship in March, a lot of testing and four new firmware revisions since I previewed the drive pushed the official release back to April.

What I have in my hands is a retail 120GB Vertex 3 with what OCZ is calling its final, production-worthy client firmware. The Vertex 3 Pro has been pushed back a bit, as its controller/firmware still have to make it through more testing and validation.

I'll get to the 120GB Vertex 3 and how its performance differs from the 240GB drive we previewed not too long ago, but first there are a few somewhat-related issues I have to get off my chest.

The Spectek Issue

Last month I wrote that OCZ had grown up after announcing the acquisition of Indilinx, an SSD controller manufacturer that was quite popular in 2009. The Indilinx deal has now officially closed, and OCZ is the proud owner of the controller company for a relatively paltry $32M in OCZ stock.

The Indilinx acquisition doesn't mean much for OCZ today, however in the long run it should give OCZ at least a fighting chance at being a player in the SSD space. Keep in mind that OCZ is now fighting a battle on two fronts. Above OCZ in the chain are companies like Intel, Micron and Samsung. These companies all have their own foundries and produce the NAND that goes into their SSDs, and in some cases the controllers as well. Below OCZ are companies like Corsair, G.Skill, Patriot and OWC. These are more of OCZ's traditional competitors, mostly acting as assembly houses or just rebadging OEM drives (Corsair is a recent exception, as it has its own firmware/controller combination with the P3 series).

By acquiring Indilinx OCZ takes one more step up the ladder towards the Intel/Micron/Samsung group. Unfortunately at that level, there's a new problem: NAND supply.

NAND flash is like any other commodity: its price is subject to variation based on a myriad of factors. If you control the fabs, then you generally have a good idea of what's coming. There's still a great deal of volatility even for a fab owner, since process technologies are very difficult to roll out and there is always the risk of issues in manufacturing, but generally speaking you've got a better chance of supply and controlled costs if you're making the NAND. If you don't control the fabs, you're at their mercy. While buying Indilinx gave OCZ the ability to be independent of any controller maker if it wanted to, OCZ is still at the mercy of the NAND manufacturers.

Intel NAND

Currently OCZ ships drives with NAND from four different companies: Intel, Micron, Spectek and Hynix. The Intel and Micron stuff is available in both 34nm and 25nm flavors, Spectek is strictly 34nm and Hynix is 32nm.

Each NAND supplier has its own list of parts, each with its own specifications. While they're generally comparable in terms of reliability and performance, there is some variance, not just on the NAND side but also in how controllers interact with that NAND.

Approximately 90% of what OCZ ships in the Vertex 2 and 3 is using Intel or Micron NAND. Those two tend to be the most interchangeable as they physically come from the same plant. Intel/Micron have also been at the forefront of driving new process technologies, so it makes sense to ship as much of that stuff as you can given the promise of lower costs.

Last month OWC published a blog post accusing OCZ of shipping inferior NAND on the Vertex 2. OWC requested a drive from OCZ, and it was built using 34nm Spectek NAND. Spectek, for those of you who aren't familiar, is a subsidiary of Micron (much like Crucial). IMFT manufactures the NAND, and the Micron side of the venture takes and packages it: some of it is used or sold by Micron, some of it is "sold" to Crucial and some of it is "sold" to Spectek. Only Spectek adds its own branding to the NAND.

OWC published this photo of the NAND used in their Vertex 2 sample:

I don't know the cause of the bad blood between OWC and OCZ nor do I believe it's relevant. What I do know is the following:

The 34nm Spectek parts pictured above are rated at 3000 program/erase cycles. I've already established that 3000 cycles is more than enough for a desktop workload with a reasonably smart controller. Given the extremely low write amplification I've measured on SandForce drives, I don't believe 3000 cycles is an issue. It's also worth noting that 3000 cycles is at the lower end for what's industry standard for 25nm/34nm NAND. Micron branded parts are also rated at 3000 cycles, however I've heard that's a conservative rating.
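Why 3000 cycles is plenty can be sanity-checked with a back-of-the-envelope endurance estimate. The sketch below is a simplified model, not OCZ's or SandForce's own math: it assumes perfect wear leveling and ignores spare area, and it uses a hypothetical 20GB of host writes per day with illustrative write amplification factors of 1.0 and 3.0 rather than any measured values.

```python
def lifetime_years(capacity_gb: float, pe_cycles: int,
                   write_amplification: float, host_gb_per_day: float) -> float:
    """Idealized years until the rated program/erase cycles are used up.

    Assumes perfect wear leveling: every block is erased equally often, so the
    NAND can absorb capacity_gb * pe_cycles of physical writes in total.
    Write amplification = NAND writes / host writes.
    """
    total_nand_writes_gb = capacity_gb * pe_cycles
    host_writes_gb = total_nand_writes_gb / write_amplification
    return host_writes_gb / host_gb_per_day / 365


# Hypothetical desktop workload on a 120GB drive with 3000 p/e cycle NAND
for wa in (1.0, 3.0):
    print(f"write amplification {wa}: {lifetime_years(120, 3000, wa, 20):.1f} years")
```

Even with a pessimistic write amplification of 3, this idealized model lands well over a decade (about 16 years, versus about 49 at a factor of 1). Real drives will fall short of the ideal, but the margin explains why 3000 cycles is not a practical concern for a desktop workload.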

If you order NAND from Spectek you'll know that the -AL on the part number is the highest grade that Spectek sells; it stands for "Full spec w/ tighter requirements". I don't know what Spectek's testing or validation methodology is, but the NAND pictured above is the highest grade Spectek sells and it's rated at 3000 p/e cycles. That is as much as I know about Intel NAND and Micron NAND. It's quite possible that the Spectek-branded stuff is somehow worse; I just don't have any information that shows me it is.

OCZ insists that there's no difference between the Spectek stuff and standard Micron 34nm NAND. Given that the NAND comes out of the same fab and carries the same p/e rating, the story is plausible. Unless OWC has done some specific testing on this NAND to show that it's unfit for use in an SSD, I'm going to call this myth busted.


Westmere-EX: Intel Improves their Xeon Flagship

Intel announced that their flagship server processor, the Xeon Nehalem-EX, is being succeeded by the Xeon Westmere-EX, a process-shrinking "tick" in Intel's terminology. By shrinking Intel's largest Xeon to 32nm, the best Westmere-EX Xeon is now clocked 6% higher (2.4GHz versus 2.26GHz), gets two extra cores (10 versus 8) and has a 30MB L3 (instead of 24MB).
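The generational gains are easy to put side by side. This short sketch uses only the top-bin figures quoted above and shows that the clock bump is the smallest change, while cores and L3 grow in lockstep.

```python
def pct_gain(old: float, new: float) -> float:
    """Percentage increase from old to new."""
    return (new / old - 1) * 100


# Top-bin figures quoted above
nehalem_ex = {"clock_ghz": 2.26, "cores": 8, "l3_mb": 24}
westmere_ex = {"clock_ghz": 2.4, "cores": 10, "l3_mb": 30}

for key in nehalem_ex:
    print(f"{key}: +{pct_gain(nehalem_ex[key], westmere_ex[key]):.1f}%")

# L3 cache per core is unchanged: 24MB / 8 = 30MB / 10 = 3MB
print(nehalem_ex["l3_mb"] / nehalem_ex["cores"],
      westmere_ex["l3_mb"] / westmere_ex["cores"])
```

The clock gain comes to about 6.2%, which the text rounds to 6%. Cores and L3 both grow by exactly 25%, so each core still gets 3MB of L3.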

As is typical for a tick, the core improvements are rather subtle. The only tangible improvement should be the improved memory controller, which is capable of extracting up to 20% more bandwidth out of the same DIMMs. The Nehalem-EX was the first quad-socket Xeon that was not starved of memory bandwidth, and we expect that the Westmere-EX will perform very well in bandwidth-limited HPC applications.

With the launch of Westmere-EX (and Sandy Bridge on the consumer side before it), it appears Intel is finally ready to admit that their BMW-inspired naming system doesn't make any sense at all. They've promised a new, "more logical" system that will be used for the coming years. The details of the new Xeon naming system are presented in the image below.

There is some BMW-ness left (e.g. the product lines 3, 5 and 7), but the numbers make more sense now. You can directly derive from the model number the maximum number of sockets, the type of socket, and whether the CPU is low-end, midrange, or high-end. Intel also keeps the "L" suffix for low-power models.
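Since the naming scheme is meant to be decodable, a small parser illustrates it. Because the original image is not reproduced here, this sketch assumes the format used by the Westmere-EX parts, for example "E7-8870": "E7" is the product line (3, 5 or 7), the first digit after the dash is the maximum socket count, the second is the socket type, the last two are the SKU, and an optional "L" marks a low-power part. The regex, class and function names are illustrative, not an Intel API.

```python
import re
from dataclasses import dataclass

# Assumed format: E<line>-<max sockets><socket type><two-digit SKU>[L]
_XEON = re.compile(
    r"^E(?P<line>[357])-(?P<sockets>\d)(?P<socket_type>\d)(?P<sku>\d{2})(?P<low_power>L?)$"
)


@dataclass
class XeonModel:
    product_line: int  # 3, 5 or 7: low-end, midrange or high-end
    max_sockets: int
    socket_type: int   # a code for the socket, not the socket name itself
    sku: int
    low_power: bool


def parse_xeon(model: str) -> XeonModel:
    """Decode a model number in the assumed scheme; raise ValueError otherwise."""
    m = _XEON.match(model.strip().upper())
    if m is None:
        raise ValueError(f"not in the assumed E<line>-<digits> format: {model!r}")
    return XeonModel(
        product_line=int(m["line"]),
        max_sockets=int(m["sockets"]),
        socket_type=int(m["socket_type"]),
        sku=int(m["sku"]),
        low_power=m["low_power"] == "L",
    )


print(parse_xeon("E7-8870"))
print(parse_xeon("E7-8867L"))
```

An old-style name such as "X5690" is rejected with a `ValueError`, which is the point of the change: under the new scheme the socket count and market segment can be read straight from the number.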


Android Devices Popular, But Developer Interest Is Falling

Fragmentation is threatening Google's popular Android operating system as consumers buy devices but developers move away. Nielsen found 31 percent of U.S. handset buyers want an Android device, compared to 30 percent for Apple's iPhone. But developer interest in Android handsets fell to 85 percent, and to 71 percent for Android tablets.

Nielsen reports that consumer interest in smartphones running Google's Android is rising in the U.S., with slightly more prospective buyers looking to buy an Android handset than Apple's iPhone. According to its latest survey, 31 percent of consumers who plan to get a new smartphone indicated Android as their preferred operating system, while 30 percent favored Apple's iPhone and 11 percent favored a Research In Motion BlackBerry.

By contrast, during the same period last year, 33 percent of respondents wanted an iPhone, 26 percent favored Android, and 13 percent a BlackBerry. Moreover, Android's rise in popularity became even clearer when Nielsen surveyed consumers who purchased a smartphone recently.

"Half of those surveyed in March 2011 who indicated they had purchased a smartphone in the past six months said they had chosen an Android device," Nielsen researchers wrote in a blog. "A quarter of recent acquirers said they bought an iPhone, and 15 percent said they had picked a BlackBerry phone."

Developers Wary of Fragmentation

Though Android's star is clearly on the rise in the U.S., some developers are frustrated with Google's platform. According to a new survey by Appcelerator and IDC, interest in software development for Android smartphones fell two points to 85 percent in the first half of April compared to three months earlier, and interest in Android tablets declined three points to 71 percent.

An issue that Google needs to address -- and which presents a potential opportunity for rivals Microsoft, Hewlett-Packard, Nokia and RIM -- is fragmentation, the survey's authors observed, with Android developers facing multiple versions, skill sets and devices. Moreover, tepid interest in the tablets running Android is chipping away at Google's momentum, they said.

"Interest in developing for Android tablets jumped 12 points in our last quarterly survey conducted in January after 85 new tablets were announced at CES," they wrote. "Enthusiasm waned through the remainder of [the first quarter], however, and Android has fallen back three points to 71 percent of respondents saying they are 'very interested' in the tablet OS."

Other Issues

Although Android's popularity decline in the development community isn't huge, it does suggest that developer interest in Android has reached a plateau. According to the survey, 63 percent of respondents said device fragmentation in Android poses the biggest risk to Google's platform, followed by weak initial traction in tablets (30 percent) and multiple Android app stores (28 percent).

"On the one hand, free availability of code and the flexibility that OEMs have is unprecedented, and has attracted many of them to make many devices," noted Al Hilwa, director of applications software development at IDC. "On the other hand, there are a variety of issues such as the overall fit and finish of the platform, the fragmentation of the devices, and the poor quality of apps in terms of data leakage, privacy or even malware."

Moreover, OEMs adopting Android must contend with legal issues arising from a number of lawsuits filed against Google as well as individual handset makers by Oracle, Microsoft, Apple and others. Additionally, handset makers face the overall challenge of figuring out "how to differentiate [Android] when everyone has the exact thing you have," Hilwa said.

By contrast, interest in Apple's iOS remains high, with 91 percent of developers saying they are "very interested" in iPhone development. What's more, 86 percent of respondents said they are very interested in developing for the iPad, the report said.


Monday, April 25, 2011

Seagate GoFlex Slim 320GB: The World's Thinnest External HDD

As a desktop user I never really jumped on the external storage craze. I kept a couple of terabyte drives in RAID-0 inside my chassis and there's always the multi-TB array in the lab in case I needed more storage. External drives were always neat to look at, but I never really needed any. My notebook's internal storage was always enough.

With the arrival of Sandy Bridge in notebooks however I've given the notebook as a desktop replacement thing a try. I've got enough random hardware if I need a fast gaming machine in a pinch, but for everything else I'm strictly notebook these days. As a result I've come to realize just how precious portable storage is. Most reasonably portable notebooks have one usable 2.5" bay at most (two if you don't mind sacrificing an optical drive). Network storage is great but what if you need something portable on the go with you?

I'm obviously a staunch advocate of spending your internal real estate on an SSD, but if you need the space you've gotta go mechanical for your external storage. If portability is what matters, an external 2.5" hard drive can be quite attractive as they're lightweight and can be powered over USB.

In the 2.5" world there are three predominant thicknesses available: 7mm, 9.5mm and 12.5mm. Most notebook drives are 9.5mm. You'll notice that Intel even ships many of its SSDs with a removable spacer to make them 9.5mm tall in order to maintain physical compatibility with as many notebooks as possible:

That black trim is removable for use in 7mm bays

Thicker drives are needed to accommodate more platters inside, but as platter densities increase so do the capacities of thinner drives. A couple of years ago Seagate announced the world's first 7mm thick 2.5" hard drive and earlier today, it announced the thinnest external 2.5" drive: the GoFlex Slim.

Originally called the GoFlex Thin at CES earlier this year, the GoFlex Slim measures 9mm tall thanks to its internal 7200RPM 7mm SATA drive.

The GoFlex Slim is actually a two-part device. There's the drive itself and a detachable GoFlex USB 3.0 adapter with a white LED power/activity light. The LED is always illuminated by default and lightly throbs when you access the disk. Remove the adapter and you can plug the GoFlex Slim into any other GoFlex compatible device. Seagate has also opened up the GoFlex standard so other manufacturers can build and ship GoFlex compatible devices royalty-free.

The drive chassis is simply glued together. A thin enough tool wedged in between the top cover and the rest of the chassis is good enough to get you inside. Once open you'll notice a pretty simple design:

The GoFlex Slim is nothing more than a 320GB Momentus Thin in a slim aluminum case, no different than the other GoFlex drives we've reviewed. The Momentus Thin spins at 7200RPM, has a 16MB on-board cache and is of course a single platter drive.

The drive comes pre-formatted with a single NTFS partition and a copy of Memeo backup software. For the Mac users among us Seagate also includes a Paragon NTFS driver as well as a Mac version of Memeo Backup.

The GoFlex Slim ships with a short 14" USB 3.0 cable that can obviously be used with USB 2.0 ports. The 320GB drive retails for $99.99.

Just as we saw at CES earlier this year, you can expect an HFS+-formatted Mac version to ship with a silver chassis in the not too distant future.


PlayStation Network Outage Blamed on 'External Intrusion'

With gamers fuming, Sony hasn't said when its PlayStation Network and Qriocity music service will be back online. But Sony said "an external intrusion" caused both services to be shut down last week. Despite speculation, the Anonymous hacker group said on its web site that it wasn't responsible for the PlayStation Network and Qriocity outage.

Sony's PlayStation Network and its Qriocity music service were still down Monday afternoon, with no word on when they will be back online. On Friday, the company reported that the downtime was caused by "an external intrusion" to both services.

Patrick Seybold, senior director of corporate communications and social media, posted on the company's PlayStation Blog on Friday that, "in order to conduct a thorough investigation and to verify the smooth and secure operation of our network services going forward," both PlayStation Network and Qriocity were turned off last Wednesday. Sony has noted that it doesn't know the degree to which personal data might have been compromised.

'For Once We Didn't Do It'

On Monday, Seybold posted that he knows users are "waiting for additional information on when PlayStation Network and Qriocity services will be online." He added that, "unfortunately, I don't have an update or time frame."

With several major new game titles coming out this week, the timing of the outage is particularly suspicious. Many observers have speculated that the "external intrusion" was caused by the Anonymous hacker group.

But Anonymous denies involvement, while simultaneously posting updates to its Facebook page that suggest it could have been involved. On its AnonNews web site, where anyone can post, there is a notice dated Friday titled For Once We Didn't Do It. The posting noted that, while some individual Anons could "have acted by themselves," AnonOps was not involved and "does not take responsibility for whatever has happened."

The posting added that the "more likely explanation is that Sony is taking advantage of Anonymous' previous ill will toward the company to distract users from the fact that the outage is actually an internal problem with the company's servers."

'No Qualms'

However, on Anonymous' Facebook page, the page owner posted in a discussion of the outage last week that "we have no qualms about our actions."

In early April, several Sony sites were brought down by members of Anonymous, the hacker group known for its politically oriented online attacks. The sites included Sony.com, Style.com and the U.S. site for PlayStation.

Before the attacks on the Sony sites, Anonymous had announced it would target the company because of Sony's lawsuit against a user named George Hotz. Sony had sought and obtained a restraining order against Hotz and other hackers for allegedly jailbreaking the PlayStation 3 game console in order to run unauthorized software such as pirated games, and for providing software tools for others to do the same.

Sony had also sought and received access to Hotz's social-media accounts and the IP addresses of visitors to his web site. The company also obtained access to his PayPal account to see donations in support of his jailbreaking efforts.

In an open letter to Sony, Anonymous said Sony "abused the judicial system in an attempt to censor information on how your products work." It added that the company "victimized your own customers merely for possessing and sharing information," and said these actions meant Sony had "violated the privacy of thousands."

Sony and Hotz reached a settlement several weeks ago. The terms of the agreement were not made public, although Hotz agreed to a permanent injunction.


Sunday, April 24, 2011

AMD's Radeon HD 6790: Coming Up Short At $150

The last couple of weeks after the recent GeForce GTX 550 Ti launch have been more eventful than I had initially been expecting. As you may recall the GTX 550 Ti launched at $150, a price tag too high for its sub-6850 performance. I’m not sure in what order things happened – whether it was a price change or a competitive card that came first – but GTX 550 Ti prices have finally come down for some of the cards.

The average price of the cheaper cards is now around $130, a more fitting price given the card’s performance.

This timing leads into today's launch. AMD is launching a new card, the Radeon HD 6790, at that same $150 price point. Based on the same Barts GPU that powers the Radeon HD 6800 series, this is AMD's customary 3rd tier product that we've come to expect after the 4830 and 5830. As we'll see, NVIDIA had good reason to drop the price on the GTX 550 Ti if they hadn't already, but at the same time AMD must still deal with the rest of the competition: NVIDIA's GTX 460 lineup and, of course, AMD itself. So just how well does the 6790 stack up in the crowded $150 price segment? Let's find out.

| | AMD Radeon HD 6870 | AMD Radeon HD 6850 | AMD Radeon HD 5830 | AMD Radeon HD 6790 | AMD Radeon HD 5770 |
|---|---|---|---|---|---|
| Stream Processors | 1120 | 960 | 1120 | 800 | 800 |
| Texture Units | 56 | 48 | 56 | 40 | 40 |
| ROPs | 32 | 32 | 16 | 16 | 16 |
| Core Clock | 900MHz | 775MHz | 800MHz | 840MHz | 850MHz |
| Memory Clock | 1.05GHz (4.2GHz data rate) GDDR5 | 1GHz (4GHz data rate) GDDR5 | 1GHz (4GHz data rate) GDDR5 | 1.05GHz (4.2GHz data rate) GDDR5 | 1.2GHz (4.8GHz data rate) GDDR5 |
| Memory Bus Width | 256-bit | 256-bit | 256-bit | 256-bit | 128-bit |
| VRAM | 1GB | 1GB | 1GB | 1GB | 1GB |
| FP64 | N/A | N/A | 1/5 | N/A | N/A |
| Transistor Count | 1.7B | 1.7B | 2.15B | 1.7B | 956M |
| Manufacturing Process | TSMC 40nm | TSMC 40nm | TSMC 40nm | TSMC 40nm | TSMC 40nm |
| Price Point | ~$200 | ~$160 | N/A | $149 | ~$110 |

3rd tier products didn't earn a great reputation last year. AMD and NVIDIA both launched such products based on their high-end GPUs (Cypress and GF100, respectively), and the resulting Radeon HD 5830 and GeForce GTX 465 were eventually eclipsed by the GeForce GTX 460, which was cooler, quieter, and faster at the same if not lower price. The problem with 3rd tier products is that they're difficult to balance: 1st tier products are fully enabled parts that are the performance kings, and 2nd tier products are the budget-minded parts that trade some performance for lower power consumption and all that follows.

While 2nd tier products are largely composed of salvaged GPUs that couldn't make it as a 1st tier product, their lower power requirements and prices make the resulting video cards solid products. But where do 3rd tier products come from? They're everything that couldn't pass muster as a 2nd tier product: more heavily damaged dies, or dies with enough functional units that still won't operate at the lower voltages of a 2nd tier product. The GTX 465 and Radeon HD 5830 embodied this, pairing the power consumption of a 1st tier card with the performance of a last-generation card, which made them difficult to recommend. This does not mean that a 3rd tier card can't be good (the Radeon HD 4830 and GTX 260 C216 were fairly well received), but it's a difficult hurdle to overcome.
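To make the tiering logic concrete, here is a minimal sketch of how salvaged dies might be sorted into the three tiers described above. The unit counts come from the spec table (80 SPs per SIMD, so 1120 SPs is 14 SIMDs, 960 is 12, and 800 is 10; ROPs are 32 or 16), but the `Die` type, the `bin_die` function, and the exact pass/fail rules are hypothetical illustrations, not AMD's or NVIDIA's actual binning process.

```python
from dataclasses import dataclass


@dataclass
class Die:
    """Hypothetical test result for one Barts-class die."""
    working_simds: int        # SIMD engines that passed testing (80 SPs each)
    working_rops: int         # functional ROPs (32 when fully intact)
    passes_low_voltage: bool  # stable at the lower voltage a 2nd tier card runs at


def bin_die(d: Die) -> str:
    """Assign a die to a product tier (illustrative rules only)."""
    # 1st tier: everything enabled; runs at the higher 1st tier voltage anyway.
    if d.working_simds >= 14 and d.working_rops == 32:
        return "1st tier"
    # 2nd tier: a couple of SIMDs fused off, but must hold up at lower voltage.
    if d.working_simds >= 12 and d.working_rops == 32 and d.passes_low_voltage:
        return "2nd tier"
    # 3rd tier: more damage, or good enough units that fail the low-voltage test.
    if d.working_simds >= 10 and d.working_rops >= 16:
        return "3rd tier"
    return "scrap"


print(bin_die(Die(13, 32, False)))  # enough units, fails low voltage -> 3rd tier
```

The third branch is the point of the passage: a die can land in the 3rd tier not only because it is more damaged, but because it cannot run at 2nd tier voltages, which is why such cards tend to carry 1st tier power consumption.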

Launching today is the Radeon HD 6790, the 3rd tier Barts part and, like the rest of the Barts-based lineup, the direct descendant of its 5800 series counterpart, in this case the Radeon HD 5830. As is to be expected, the 6790 is further cut down from the 6850, losing 2 SIMD units and half of its ROPs; partially offsetting this are higher clockspeeds for both the core and the memory. With 800 SPs and 16 ROPs operating at 840MHz, on paper the 6790 looks a lot like a Radeon HD 5770 with a 256-bit bus, albeit one that's clocked slower, most notably with its 1050MHz (4.2GHz data rate) memory clock versus the 5770's 1.2GHz.

From the 5830 we learned that losing ROPs hurts far more than losing SPs, and we're expecting much the same here: total pixel-pushing power is roughly halved, and MSAA performance also takes a dive. Overall the 6790 has 90% of the shading/texturing capacity, 54% of the ROP capacity, half the L2 cache, and 105% of the memory bandwidth of the 6850. Compared to the 5770, it has 98% of the shading/texturing capacity, 98% of the ROP capacity, and 175% of the memory bandwidth, not accounting for the architectural differences between Barts and Juniper.

Further extending the 5830 comparison, AMD is again leaving the design of the card in the hands of its partners. The card sampled to the press is based on the 6870's cooler and PCB, as the 6790's 150W TDP is almost identical to the 6870's 151W TDP; however, as with the 5830, no one will be shipping a card using this design. Instead all of AMD's partners will be using their own in-house designs, so we'll be seeing a variety of coolers and PCBs in use. Accordingly, while we can still look at the card's performance, our power, temperature, and noise data will not match any retail card, although power consumption should be very close.

At 150W AMD is skirting the requirement for 2 PCIe power sockets. Being based on a 6870, our sample uses 2 sockets, and any other design using a 6870 PCB verbatim should be similar, but some cards will ship with only a single socket. This doesn't change the card's power requirements (it's roughly 150W either way), but it makes the card compatible with lower-wattage PSUs that come with only 1 PCIe power plug.
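The percentages above follow directly from the spec table: shading/texturing throughput scales with SPs times core clock (texture units track SPs 1:20 on all three cards), ROP throughput with ROPs times core clock, and bandwidth with bus width times data rate. A small sketch that reproduces them, assuming those simple proportionalities and ignoring architectural differences, as the text does; `SPECS`, `throughput`, and `relative` are illustrative names.

```python
# Figures from the spec table: (stream processors, ROPs, core MHz, bus bits, data rate GHz)
SPECS = {
    "HD 6850": (960, 32, 775, 256, 4.0),
    "HD 6790": (800, 16, 840, 256, 4.2),
    "HD 5770": (800, 16, 850, 128, 4.8),
}


def throughput(card):
    """Return (shading, ROP, bandwidth) figures that are proportional to real throughput."""
    sps, rops, mhz, bus_bits, rate_ghz = SPECS[card]
    shading = sps * mhz                    # shader/texture work per second (relative units)
    fill = rops * mhz                      # pixels per second (relative units)
    bandwidth = bus_bits / 8 * rate_ghz    # GB/s
    return shading, fill, bandwidth


def relative(card, base):
    """Ratio of each throughput figure for `card` versus `base`."""
    return tuple(a / b for a, b in zip(throughput(card), throughput(base)))


for base in ("HD 6850", "HD 5770"):
    shade, fill, bw = relative("HD 6790", base)
    print(f"6790 vs {base}: shading {shade:.1%}, ROP {fill:.1%}, bandwidth {bw:.1%}")
```

Against the 6850 this gives roughly 90%, 54%, and 105%; against the 5770 the shading and ROP ratios both work out to about 98.8% and bandwidth to 175%.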
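Why 150W is the line: under the PCI Express electromechanical spec, an x16 graphics slot supplies up to 75W, a 6-pin auxiliary connector another 75W, and an 8-pin connector 150W. A quick budget check, assuming the sockets in question are 6-pin connectors (the article doesn't specify the type); `power_budget` and `fits` are hypothetical helper names.

```python
# Nominal PCI Express power limits, in watts.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def power_budget(connectors):
    """Maximum board power available from the slot plus the given auxiliary connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


def fits(board_power_w, connectors):
    """True if a card with this board power stays within the nominal budget."""
    return board_power_w <= power_budget(connectors)


print(fits(150, ["6-pin"]))           # 6790: exactly at the single-socket limit
print(fits(151, ["6-pin"]))           # 6870: 1W over, so it needs a second socket
print(fits(151, ["6-pin", "6-pin"]))
```

This is why a 150W card can get by with one socket while the 151W 6870 cannot, even though the two draw essentially the same power.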


As we mentioned previously, AMD is launching the 6790 at $150. With the GTX 550 Ti's price drop, the 6790's closest competitor is no longer the GTX 550 Ti but rather cheap GTX 460 768MB cards, which on average also go for about $150. AMD's internal competition is the 6850, which averages closer to $160. Technically the Radeon HD 5770 is also competition, but at around $110 after rebate it's far more value-oriented than the 6790.

Meanwhile the 6790 name also marks the first time we've seen the 6700 series in the retail market. In the OEM market AMD has rebadged the 5700 series as the 6700 series, but that change won't ever be coming to the retail market, making this the only 6700 series card we'll see. It's a bit odd to see one series shared by two such different GPUs, but AMD bases this on how close the 5770/6770 and the 6790 are in terms of specs; they want to frame the 6790 in terms of the 5770/6770 rather than the 6800 series. If nothing else it's a nice correction for the poor naming of the 6800 series; a 6830 would have been the 5830 but slower.

April 2011 Video Card MSRPs

| NVIDIA | Price | AMD |
|---|---|---|
| | $700 | Radeon HD 6990 |
| GeForce GTX 580 | $480 | |
| GeForce GTX 570 | $320 | Radeon HD 6970 |
| GeForce GTX 560 Ti | $240 | Radeon HD 6950 1GB |
| | $200 | Radeon HD 6870 |
| GeForce GTX 460 1GB | $160 | Radeon HD 6850 |
| GeForce GTX 460 768MB | $150 | Radeon HD 6790 |
| GeForce GTX 550 Ti | $130 | |
| | $110 | Radeon HD 5770 |


A Look At Triple-GPU Performance And Multi-GPU Scaling

It's been quite a while since we've looked at triple-GPU CrossFire and SLI performance, or for that matter at GPU scaling in depth. While NVIDIA in particular likes to promote multi-GPU configurations as a price-practical upgrade path, such configurations are still almost always the domain of the high-end gamer.

At $700 we have the recently launched GeForce GTX 590 and Radeon HD 6990, dual-GPU cards whose existence hinges on how well games will scale across multiple GPUs. Beyond that we move into the truly exotic: triple-GPU configurations using three single-GPU cards, and quad-GPU configurations using a pair of the aforementioned dual-GPU cards. If you have the money, NVIDIA and AMD will gladly sell you upwards of $1500 in video cards to maximize your gaming performance.

These days multi-GPU scaling is a given, at least to some extent. Below the price of a single high-end card our recommendation is always going to be to get a bigger card before you get more cards, as multi-GPU scaling is rarely perfect, and with cutting-edge games there's often a lag between a game's release and the release of a driver profile that enables multi-GPU scaling. Once we're looking at the Radeon HD 6900 series or the GF110-based GeForce GTX 500 series, though, going faster is no longer an option, and thus we have to look at going wider.

Today we’re going to be looking at the state of GPU scaling for dual-GPU and triple-GPU configurations. While we accept that multi-GPU scaling will rarely (if ever) hit 100%, just how much performance are you getting out of that 2nd or 3rd GPU versus how much money you’ve put into it? That’s the question we’re going to try to answer today.
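One way to frame that question numerically: express each configuration's frame rate as a speedup over one GPU, as a scaling efficiency (speedup divided by GPU count, where 1.0 is perfect scaling), and as the frames per second each additional card adds per dollar spent on it. The sketch below uses made-up frame rates and a made-up card price purely for illustration; they are not results from our testing, and `scaling_report` is a hypothetical helper.

```python
def scaling_report(fps_by_gpu_count, card_price):
    """fps_by_gpu_count[i] is the frame rate with i + 1 identical GPUs.

    Returns (gpu count, speedup, scaling efficiency, fps added per dollar) rows.
    """
    base = fps_by_gpu_count[0]
    rows = []
    for n, fps in enumerate(fps_by_gpu_count, start=1):
        speedup = fps / base
        efficiency = speedup / n  # 1.0 would be perfect linear scaling
        prev = fps_by_gpu_count[n - 2] if n > 1 else 0.0
        marginal_per_dollar = (fps - prev) / card_price  # fps gained from the nth card
        rows.append((n, speedup, efficiency, marginal_per_dollar))
    return rows


# Hypothetical numbers for illustration only.
for n, speedup, eff, per_dollar in scaling_report([50.0, 90.0, 115.0], card_price=500):
    print(f"{n} GPU(s): {speedup:.2f}x, {eff:.0%} efficient, {per_dollar * 100:.1f} fps per $100")
```

The last column is the one that captures "how much performance versus how much money": even when overall efficiency looks respectable, the 3rd card's marginal return can fall off much faster than the 2nd's.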

From the perspective of a GPU review, we find ourselves in an interesting situation in the high-end market right now. AMD and NVIDIA have just finished their major pushes for this high-end generation, but the CPU market is not in sync. In January Intel launched their next-generation Sandy Bridge architecture, but unlike the past launches of Nehalem and Conroe, this one initially passed over the high-end market. For $330 we can get a Core i7 2600K and crank it up to 4GHz or more, but the platform we get to pair it with is lacking.

Sandy Bridge only supports a single PCIe x16 link coming from the CPU, so an awesome CPU is held back by limited off-chip connectivity: DMI and a single PCIe x16 link. For two GPUs we can split that into x8 and x8, which shouldn't be too bad. But what about three GPUs? With PCIe bridges we can mitigate the issue somewhat by allowing the GPUs to talk to each other at x16 speeds and dynamically allocating CPU-to-GPU bandwidth based on need, but at the end of the day we're splitting a single x16 link across three GPUs.

The alternative is to take a step back and work with Nehalem and the X58 chipset. Here we have 32 PCIe lanes to work with, doubling the amount of CPU-to-GPU bandwidth, but the tradeoff is the CPU. Gulftown and Nehalem are capable chips on their own, but clock for clock the Nehalem architecture is normally slower than Sandy Bridge, and neither chip can clock quite as high on average. Gulftown does offer more cores (6 versus 4), but very few games are held back by core count. Instead the ideal configuration maximizes the performance of a few cores.

Later this year Sandy Bridge E will correct this by offering a Sandy Bridge platform with more memory channels, more PCIe lanes, and more cores, giving us the best of both worlds. Until then it comes down to choosing one of two platforms: a faster CPU or more PCIe bandwidth. For dual-GPU configurations this should be an easy choice, but for triple-GPU configurations it's not quite as clear cut. For now we're going with the latter, testing on our trusty Nehalem + X58 testbed, which largely eliminates the bandwidth bottleneck in exchange for a CPU bottleneck.
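A rough way to see the cost of splitting one x16 link: PCIe 2.0 runs at 5 GT/s with 8b/10b encoding, or 500MB/s per lane in each direction, so a full x16 link carries about 8GB/s. The sketch below computes the worst-case CPU-to-GPU share per card when every GPU transfers at once and the CPU-side lanes are divided evenly. That even split is a simplifying assumption: real boards use fixed widths such as x8/x8 or x16/x8/x8, and bridge chips reallocate bandwidth on demand. GPU-to-GPU traffic through a bridge is not modeled.

```python
PCIE2_GBPS_PER_LANE = 0.5  # PCIe 2.0: 5 GT/s with 8b/10b encoding, per lane, per direction

# CPU-side PCIe lanes available for graphics on each platform, per the text.
PLATFORMS = {"Sandy Bridge": 16, "Nehalem + X58": 32}


def per_gpu_share(cpu_lanes, gpus):
    """Worst-case CPU-to-GPU bandwidth per GPU (GB/s, one direction) when all GPUs
    transfer simultaneously and the CPU-side lanes are shared evenly (an assumption)."""
    return cpu_lanes * PCIE2_GBPS_PER_LANE / gpus


for name, lanes in PLATFORMS.items():
    for gpus in (2, 3):
        print(f"{name}, {gpus} GPUs: {per_gpu_share(lanes, gpus):.2f} GB/s per GPU")
```

With two GPUs, Sandy Bridge's x8/x8 split still leaves each card 4GB/s; with three, the worst case falls to roughly 2.7GB/s per card versus about 5.3GB/s on X58.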